User:Turb0-Sunrise/Turb0pedia/Detecting AI work

In this damned era, in which human work feels replaceable by a few buttons and one huge neural network, detecting AI work can seem ever more impossible. But it's actually really fucking easy. You see, ChatGPT is like Ross Ulbricht. He was the criminal mastermind behind the Silk Road, one of the biggest online drug markets, and he was "good" at hiding his work. The FBI and other federal agencies spent years trying to figure out who created the site. Then an investigator new to the case realized that Ross had left a few tiny bits of evidence behind, enough to reveal his name and his location.

ChatGPT is just like that, minus the life sentence he was handed and that time Trump pardoned him.

Common AI Tropes

- Sarcastic headers. A header like "Marines — But Make it Local" is obviously going for sarcasm, something practically no human writer here does.

- Titled headers. These go hand-in-hand with the one above. On their own, titled headers are a weaker sign that something was written by AI, but they're the cherry on the cake.

- Random bolding in the text. You can clearly see this in TropicalPanther's Mappy#Origin, where random "notable" words are bolded. This is not what a human would do. A human would (usually) link those words instead.

- Who made the article? Check the author: do they have a consistent history of writing AI slop? If so, go back and look for the points above.
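
If you'd rather not eyeball every page, two of the tropes above can be roughly counted by a script. This is only a minimal sketch, not a real detector, and it makes several assumptions that are not from this page: that the article is stored as MediaWiki wikitext (`== Header ==`, `'''bold'''`, `[[link]]`), that "titled headers" means multi-word headers in title case, and that `trope_flags` and its result keys are made-up names for illustration. Sarcasm and author history still need a human.

```python
import re

# Assumed MediaWiki syntax: == Header ==, '''bold''', [[link]]
HEADER_RE = re.compile(r"^(={2,6})[ \t]*(.+?)[ \t]*\1[ \t]*$", re.MULTILINE)
BOLD_RE = re.compile(r"'''(.+?)'''")
LINK_RE = re.compile(r"\[\[.+?\]\]")


def is_title_case(header):
    """Rough guess: a multi-word header whose longer words are all capitalized."""
    words = header.split()
    if len(words) < 2:
        return False
    # Ignore short words ("it", "the") and tokens that do not start with a letter.
    long_words = [w for w in words if len(w) > 3 and w[0].isalpha()]
    return bool(long_words) and all(w[0].isupper() for w in long_words)


def trope_flags(wikitext):
    """Count the tropes that can be checked mechanically (hypothetical helper)."""
    headers = [m.group(2) for m in HEADER_RE.finditer(wikitext)]
    bold_spans = BOLD_RE.findall(wikitext)
    return {
        "title_case_headers": [h for h in headers if is_title_case(h)],
        # Bold text that does not wrap a link: the "random bolding" trope.
        "unlinked_bold": sum(1 for b in bold_spans if "[[" not in b),
        "links": len(LINK_RE.findall(wikitext)),
    }


sample = (
    "== Marines \u2014 But Make it Local ==\n"
    "The '''Marines''' fought the '''Empire''' near [[Mappy]].\n"
    "== Origin ==\n"
    "Plain text.\n"
)
result = trope_flags(sample)
```

A page with a lot of unlinked bold and very few links is worth a closer look, but treat the numbers as a hint, not a verdict.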

Detecting AI Images

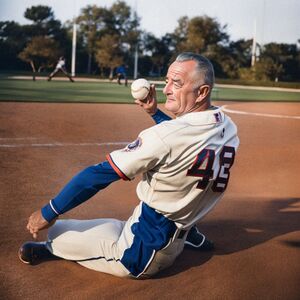

Artificially created images will often look too smooth, have bad lighting, or fuck up major parts of the image. When the mistakes are small, they're easy to miss, but occasionally they're very noticeable. Another giveaway is that most AIs can't generate legible letters. The picture here sums it up pretty clearly.